I’ve had the pleasure of attending the Deconstruct conference in Seattle. The first day has just wrapped up and this is a recap of the talks.

2 Factor, 4 Humans by Karla Burnett

Karla spoke on the various forms of two-factor authentication and the problems each has.

Account takeovers are a very common problem. People reuse passwords across services, so when one service is compromised, their accounts on other services can also be easily compromised. Password managers are difficult for non-technical people to use.

2FA has significant usability problems. It is too complex for many people to use successfully, and it can deter people from using a service.

There are four types of 2FA:

#1 Time Based

Examples: RSA SecurID, Google Authenticator

Secret + Current Time = six digit code
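To make the "secret + current time" idea concrete, here is a minimal sketch of how a time-based code can be derived, following the TOTP scheme from RFC 6238 with its default 30-second step and SHA-1. The function name `totp` and its parameters are my own choices for illustration, not something shown in the talk.

```python
import hashlib
import hmac
import struct
import time
from typing import Optional


def totp(secret: bytes, now: Optional[float] = None, step: int = 30, digits: int = 6) -> str:
    """Derive a one-time code from a shared secret and the current time."""
    if now is None:
        now = time.time()
    # Both sides count how many `step`-second windows have passed since the epoch.
    counter = int(now // step)
    digest = hmac.new(secret, struct.pack(">Q", counter), hashlib.sha1).digest()
    # Dynamic truncation: the low 4 bits of the last byte pick a 4-byte slice.
    offset = digest[-1] & 0x0F
    value = struct.unpack(">I", digest[offset:offset + 4])[0] & 0x7FFFFFFF
    return str(value % 10 ** digits).zfill(digits)
```

The server runs the same calculation with its copy of the secret and compares the result, which is why the phone and the server need reasonably accurate clocks.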

#2 SMS

Google introduced this method because at the time not everyone had a smartphone.

Vulnerable to hackers switching the phone number to a new device. This is what caused the large iCloud hack a few years ago that targeted celebrities.

#3 Mobile App

Example: Duo

Users can still be phished. A user might log in to a site that looks real but is a “fake” site controlled by hackers. The user enters their password, which the hacker forwards to the true service. That triggers a push notification, which the user approves without a second thought.

#4 Security Key

Example: Yubikey

Secret + Domain + Nonce is used to authenticate. Including domain means a “fake” site like in #3 couldn’t intercept the user’s credentials.
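A rough sketch of why including the domain helps. Real security keys use per-site key pairs and public-key signatures; to stay within the Python standard library this sketch swaps in a shared-secret HMAC instead, and the function names are hypothetical.

```python
import hashlib
import hmac


def sign_challenge(device_secret: bytes, domain: str, nonce: bytes) -> bytes:
    """Key side: the response covers the domain the browser is actually on."""
    message = domain.encode("utf-8") + b"\x00" + nonce
    return hmac.new(device_secret, message, hashlib.sha256).digest()


def verify_response(device_secret: bytes, expected_domain: str,
                    nonce: bytes, response: bytes) -> bool:
    """Service side: recompute the response for its own domain and compare."""
    expected = sign_challenge(device_secret, expected_domain, nonce)
    return hmac.compare_digest(expected, response)
```

If the user is on a look-alike domain, the key signs that domain, so a response relayed to the real service does not verify. The nonce keeps an old response from being replayed.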

Expensive. Not feasible for the mass population.

Very U.S. centric. Security keys can be very difficult to acquire in other countries.

All 2FA methods have problems. They hurt usability and frustrate users.

Think about usability when adding 2FA and deciding which options to include.

Do threat modeling for your service and users. Consider what your service does and the risk involved. Is there PII, banking information, etc.?

For many services, SMS 2FA sufficiently reduces risk without introducing too much friction for users.

Multiplayer Game Networking by Ayla Myers

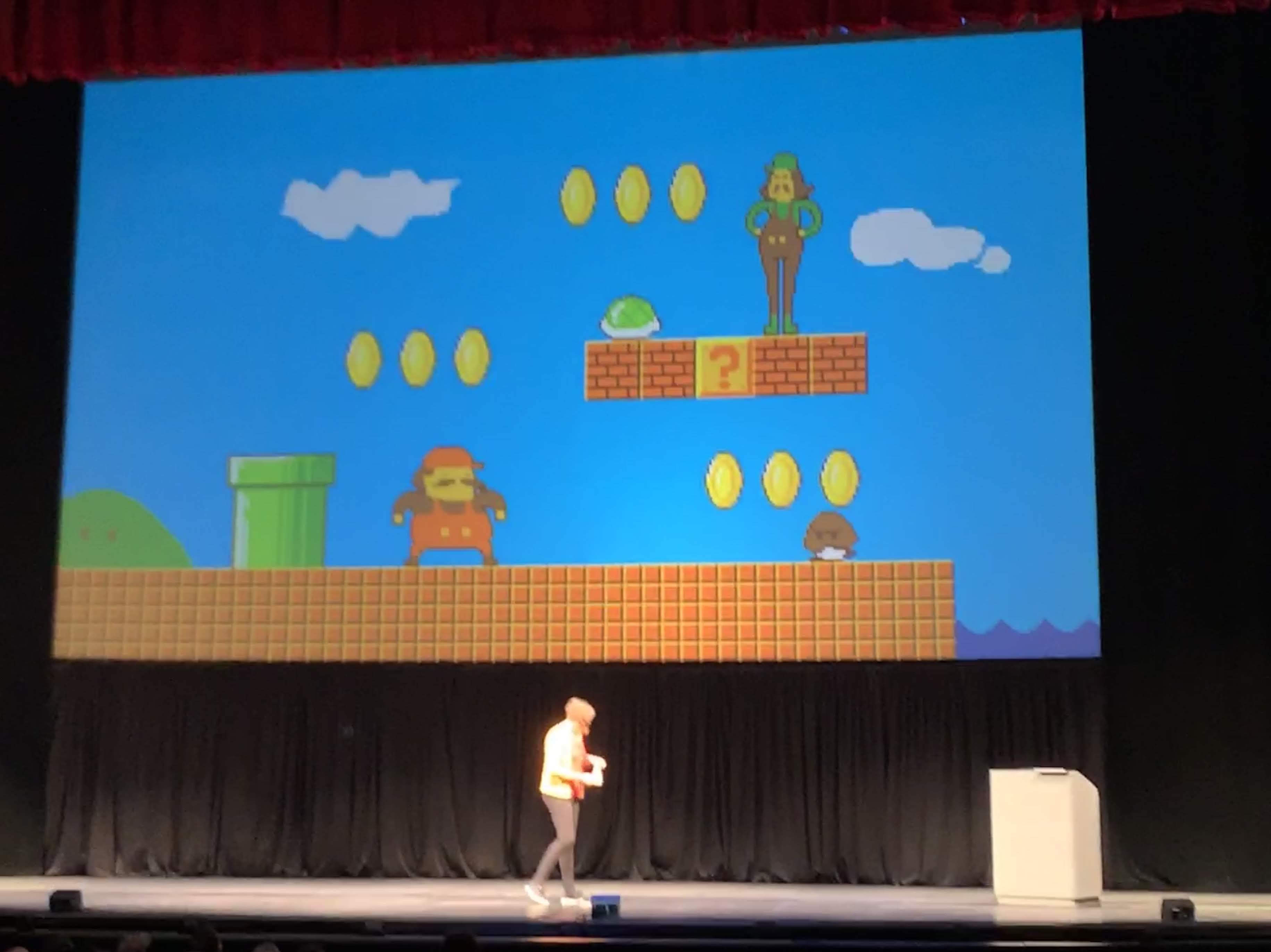

Ayla gave an incredible talk on her journey to build a multiplayer game and understand the networking behind it.

She walked us through three techniques she tried in building a multiplayer game and finally succeeded by applying all three.

The slides she created were particularly fun and creative.

Techniques she tried. Imagine a shooting game.

#1 Clients send the location of bullets they fire.

Clients can interpret the locations differently. Client #1 thinks they hit something but client #2 thinks they dodged.

Latency is the biggest problem. The user hits the jump button, which is sent to the server, and the server responds with the result. But networks are slow and there could be 500 milliseconds before the response is received. This results in a delay for the user. E.g. jump button pressed….wait….then you see the character jump on screen.

#3 Client can predict future state

Client and server both run copies of the game.

Server has to confirm decisions by clients and can overrule.

Server has to decide when two different clients predict different results. Very complicated! Server has to rewind the actions of clients to figure out the solution.
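A minimal sketch of the client side of prediction, assuming a one-dimensional position and a server that reports the last input it has processed. The class and method names are made up for illustration and are not from Ayla's game.

```python
from dataclasses import dataclass, field


@dataclass
class PredictingClient:
    x: int = 0          # predicted position shown to the player
    next_seq: int = 0   # sequence number for the next input
    pending: list = field(default_factory=list)  # inputs the server has not confirmed

    def press(self, dx: int) -> None:
        """Apply an input right away instead of waiting for the server."""
        self.pending.append((self.next_seq, dx))
        self.next_seq += 1
        self.x += dx

    def on_server_state(self, server_x: int, last_seq: int) -> None:
        """Rewind to the server's state, then replay the unconfirmed inputs."""
        self.pending = [(seq, dx) for seq, dx in self.pending if seq > last_seq]
        self.x = server_x
        for _, dx in self.pending:
            self.x += dx
```

The player sees the result of a button press immediately, and when the server overrules a prediction the client snaps to the server's answer and reapplies only what the server has not seen yet.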

PICO-8 is a “fantasy” console where you can build your own games.

Jepsen 11: Once More Unto The Breach by Kyle Kingsbury

Jepsen is a library used to verify the claims that vendors make about their databases, queues, etc. Kyle walked us through three databases and the significant problems found in each one.

The three were FaunaDB, YugabyteDB, and TiDB.

Jepsen works by doing many operations in a DB, such as inserting random values and reading them back out. It will introduce faults to the DB, like simulating power failure of a node. Jepsen has been used to find bugs like simulated bank accounts returning incorrect values.
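To illustrate the bank-account style of check, here is a toy sketch. This is not Jepsen's API (Jepsen itself is a Clojure library that drives real clusters); it is an in-memory stand-in where `lose_write_every` is a hypothetical injected fault that drops the credit half of some transfers.

```python
import random


def run_transfers(accounts, n_ops, rng, lose_write_every=None):
    """Apply random transfers and record a full read after each one."""
    reads = []
    for i in range(1, n_ops + 1):
        src, dst = rng.sample(sorted(accounts), 2)
        amount = rng.randint(1, 5)
        accounts[src] -= amount
        # Injected fault: every Nth transfer debits the source but never credits.
        if not (lose_write_every and i % lose_write_every == 0):
            accounts[dst] += amount
        reads.append(dict(accounts))
    return reads


def check_bank_reads(reads, expected_total):
    """Transfers only move money around, so every read must sum to the total."""
    return [r for r in reads if sum(r.values()) != expected_total]
```

The checker never needs to know which transfer went wrong. It only needs an invariant that any correct history must satisfy, which is what makes this kind of test practical against a black-box database.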

Vendor claims have often been found to be wrong.

Recommendations:

- Read the DB docs very carefully!

- Docs can be wrong so verify the claims.

- Consider failure modes – e.g. what happens if power is lost

- No perfect DB

Identifying Mushrooms Like a Prolog by Josh Cox

Josh’s hobby is mushroom hunting. He also likes to learn new programming languages and finds them all unique. He used Prolog to help him identify mushrooms.

There are many Prolog variants but he is using SWI Prolog.

Prolog is a logic programming language, far different from most languages like JavaScript.

It has a concept of facts and rules.

I’ve never used Prolog but it appears to be somewhere between a database and a rules engine.

After you define the facts and rules you can query Prolog and it’ll work out the logic of how to respond.

Josh encoded multiple facts about mushrooms. For example, a mushroom might be represented by facts such as cap shape, spore color, gill type, etc.
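Since I haven't written Prolog, here is a rough Python analogue of the facts-then-query idea rather than real Prolog. The mushroom names and trait values are placeholders I made up, not Josh's data, and Prolog's actual resolution is far more general than this lookup.

```python
# Each fact is (trait, mushroom, value), similar in spirit to a Prolog fact.
FACTS = {
    ("cap_shape", "mushroom_a", "convex"),
    ("spore_color", "mushroom_a", "white"),
    ("gill_type", "mushroom_a", "free"),
    ("cap_shape", "mushroom_b", "convex"),
    ("spore_color", "mushroom_b", "brown"),
    ("gill_type", "mushroom_b", "attached"),
}


def candidates(**observed):
    """Rule: a mushroom is a candidate if every observed trait matches a fact."""
    names = {name for _, name, _ in FACTS}
    return sorted(
        name for name in names
        if all((trait, name, value) in FACTS for trait, value in observed.items())
    )
```

Calling `candidates(cap_shape="convex")` keeps both placeholder mushrooms, and adding `spore_color="white"` narrows it to one, which mirrors how each extra observation narrows an identification.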

Please Inline This Abstraction by Dan Abramov

This was a very practical talk about the dangers of abstractions. Code duplication is often the better approach due to its improved maintainability. Eventually, an abstraction may be better, but we need to weigh the pros and cons and ensure we don’t make a bigger problem down the road.

Problems with abstractions:

A fix for a bug in an abstraction means you need to verify it doesn’t introduce bugs in any of the consumers of the abstraction.

From spaghetti code to “lasagna code”, meaning you end up with many layers in your code that are hard to follow and understand.

Complex abstractions have inertia. As they grow and get more complex and get used more, it takes more and more time/effort to unwind and remove.

Recommendations

If you have an abstraction, don’t just unit test it, but test where the value is. E.g. if you have an abstraction used in many React components, test its use in each React component. This will help you identify when changes in the abstraction introduce bugs in components.

Basically, this boils down to doing integration testing rather than just pure unit tests.

Delay adding abstractions until really necessary.

A Personal Computer for Children of All Cultures by Ramsey Nasser

To learn programming you must already be proficient in English. This excludes many people from different cultures.

Ramsey created a programming language in Arabic to explore the idea of programming languages in other languages. It is limited in that it cannot consume libraries written in English.

Unicode enables languages to use any character, including words in non-English languages, so that you can give functions, variables, etc. names in your native language.

But this doesn’t solve the problem of language keywords like if, for, function, etc.

Also, the entire computer stack is written in English. Even at the lowest levels in an OS you’ll find English.

Names have cultural and political implications. For example, there are many cities named Alexandria because they were conquered by Alexander the Great.

One possible solution is to decouple the programming constructs from the names we give them. Take git for example. It has hashes assigned for each change. A programming language could follow that pattern. It could also provide a dictionary that is separate from the program and provides names for the constructs. That dictionary could contain names in many different languages that the software developer could choose from.

This is preferred over translation from English to another language because, with translation, English will always be the source of truth.
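A small sketch of what that decoupling could look like, assuming constructs are identified by a hash of their definition and each human language supplies its own dictionary of names. Everything here (the definitions, the Spanish keywords, the `render` helper) is hypothetical and only meant to illustrate the idea.

```python
import hashlib


def construct_id(definition: str) -> str:
    """Identify a construct by a hash of its definition, not by a name."""
    return hashlib.sha1(definition.encode("utf-8")).hexdigest()[:10]


LOOP = construct_id("repeat a block while a condition holds")
BRANCH = construct_id("run a block only if a condition holds")

# Dictionaries live outside the program; no language is the source of truth.
NAMES = {
    "en": {LOOP: "while", BRANCH: "if"},
    "es": {LOOP: "mientras", BRANCH: "si"},
}


def render(program, language):
    """Show a stored program using the reader's chosen dictionary."""
    names = NAMES[language]
    return [names.get(token, token) for token in program]
```

The stored program contains only ids, so the same program can be displayed with English or Spanish keywords without either one being a translation of the other.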

The problem is that doing something like this is impossible for existing languages and requires entirely new languages that likely are incompatible with existing languages and tools.

But being more culturally sensitive is likely not enough to increase adoption of this hypothetical language. However, if that language could provide other benefits, it might be enough to encourage adoption.

Clock Skew and You by Allison Kaptur

Clock skew is very common in distributed systems. Most software developers are working on distributed systems without realizing it. E.g. a web app with a database is a distributed system.

Clocks are not good at staying accurate. Things like temperature, leap seconds, and more cause problems.

Clocks can be synced but networks cause problems because they add a delay. There are algorithms that allow the network delay to be calculated and taken into account.
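As a sketch of how the delay can be taken into account, this is the four-timestamp calculation that NTP uses. It assumes the network delay is roughly the same in both directions. The function and parameter names are mine.

```python
def offset_and_delay(t0: float, t1: float, t2: float, t3: float):
    """Estimate clock offset and round-trip delay from one request/response.

    t0: client clock when the request was sent
    t1: server clock when the request arrived
    t2: server clock when the response was sent
    t3: client clock when the response arrived
    """
    # Round trip as seen by the client, minus time spent inside the server.
    delay = (t3 - t0) - (t2 - t1)
    # How far the client clock is behind (+) or ahead (-) of the server.
    offset = ((t1 - t0) + (t2 - t3)) / 2
    return offset, delay
```

For example, if the client's clock is 5 seconds behind, each network hop takes 0.1 seconds, and the server spends 0.05 seconds on the request, the timestamps are `t0=100.0`, `t1=105.1`, `t2=105.15`, `t3=100.25`. The estimate comes out as an offset of about 5 seconds and a delay of about 0.2 seconds.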

True Chimer is a time server that is accurate.

False Ticker is a time server that is inaccurate.

Client times cannot be trusted. Always behave reasonably if the client time is wrong. For example, say a UI shows a relative time message like “3 hours ago” based on the client time. What would happen if the client’s computer was five hours behind? The UI should not show “2 hours from now”. Maybe that means just omitting the message.
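A minimal sketch of that fallback, assuming the UI simply omits the label when the event appears to be in the future. The function name `relative_label` is hypothetical.

```python
from datetime import datetime, timedelta, timezone
from typing import Optional


def relative_label(event_time: datetime, client_now: datetime) -> Optional[str]:
    """Return text like "3 hours ago", or None if the client clock looks wrong."""
    elapsed = client_now - event_time
    if elapsed < timedelta(0):
        # The event would be "in the future": the client clock is behind, so omit.
        return None
    minutes = int(elapsed.total_seconds() // 60)
    if minutes < 1:
        return "just now"
    if minutes < 60:
        return f"{minutes} minute{'s' if minutes != 1 else ''} ago"
    hours = minutes // 60
    return f"{hours} hour{'s' if hours != 1 else ''} ago"
```

The caller renders nothing when it gets `None`, so a skewed client shows less information instead of showing nonsense.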

Pick a timekeeper server. DB is usually a great option.

Voice Driven Development by Emily Shea

Emily has really bad RSI and decided to try programming by voice.

This was really impressive. She drove the presentation entirely by voice. E.g. “next slide”, “start demo”

Writes mostly Perl. 🤮

She tried dozens of possible solutions for her RSI.

She uses a combination of Dragon Dictation and Talon. Talon is made by a software developer with RSI. Tailor-made for programming.

Problems with voice driven programming:

Tools with poor accessibility.

Voice strain. Some people have had to get voice coaches to ensure they are speaking properly so they don’t injure themselves talking 8 hours a day.

Open offices. Working from home is a great alternative if your company supports it. Acoustic sound booths are another option. There are problems from you disturbing your teammates but also from their talking affecting the computer’s ability to understand you.

You could also use a stenomask if you want to look like Darth Vader.

Emily has configured Talon to help her be quick with her programming. Very configurable for different languages and use cases.